The Rise of AI and High-Performance Computing

Artificial intelligence (AI) and machine learning require massive computational power. AI workloads, such as training large language models and processing big data, demand high-density, high-performance computing environments. Data center expansions are crucial to supporting these growing workloads.

As businesses continue shifting to cloud-based services, hyperscale cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud are expanding their infrastructure. At the same time, enterprises are investing in private and hybrid cloud solutions to balance security, scalability, and performance.

The data center industry is undergoing an unprecedented transformation. With massive investments from cloud giants like AWS, Microsoft, and Google, the future of digital infrastructure is being redefined. These projects are not just about increasing capacity; they are about improving energy efficiency, supporting AI advancements, and bringing data closer to users.

As 2026 begins, these mega-projects will shape the next generation of cloud computing, AI-driven services, and edge technology. The digital world is only getting bigger, and data centers will continue to be the foundation that powers the future.

Data center equipment is sensitive to temperature, humidity, and other environmental factors. Failure to monitor these can lead to hardware failure, data loss, and costly downtime.

Proactive monitoring is crucial for meeting service level agreements and maintaining business continuity.

Monitoring helps identify hot spots and cold spots so that cooling systems can be adjusted to run more efficiently, which lowers the facility's power usage effectiveness (PUE) ratio.

Industry standards and guidelines (e.g., the ASHRAE thermal guidelines) call for documented environmental controls, which also support regulatory compliance.

Early detection of smoke, water leaks, and other hazards protects investments and personnel.
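Power usage effectiveness, mentioned above, is the ratio of total facility energy to IT equipment energy, so a lower value means less overhead spent on cooling and power distribution. The following is a minimal sketch of that arithmetic; the function name and the meter readings are hypothetical and chosen only for illustration.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE = total facility energy / IT equipment energy.

    A value of 1.0 would mean every kWh goes to IT equipment.
    """
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical monthly meter readings before and after a cooling adjustment.
pue_before = power_usage_effectiveness(1_500_000, 1_000_000)
pue_after = power_usage_effectiveness(1_350_000, 1_000_000)
print(f"PUE before: {pue_before:.2f}, after: {pue_after:.2f}")
```

In this made-up example the IT load is unchanged and only the cooling overhead drops, which is the effect that hot-spot and cold-spot monitoring is meant to enable.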

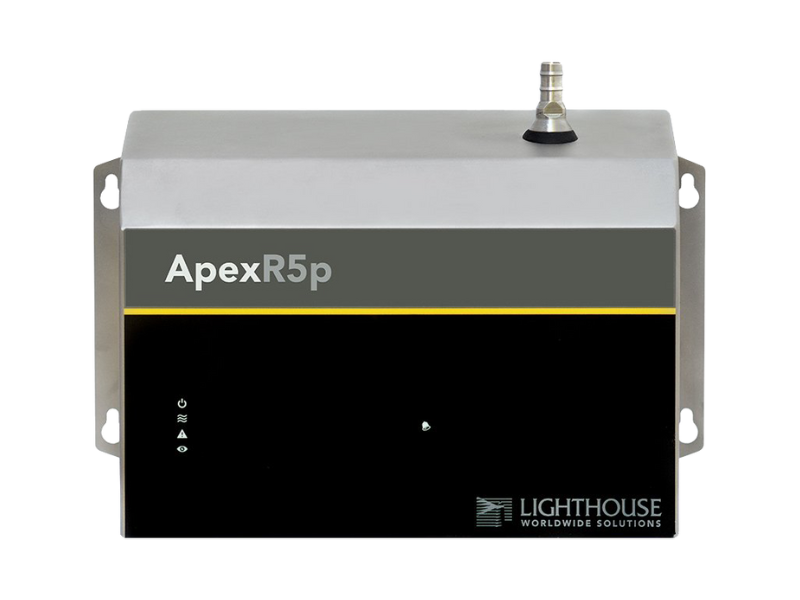

Airborne and liquid particle counters are critical to data center reliability because they detect microscopic contaminants that can damage sensitive electronics and cooling infrastructure long before failures occur. Airborne particles such as dust, zinc whiskers, and corrosive residues can settle on circuit boards, disrupt airflow, and cause short circuits. Left uncontrolled, they can cut equipment life in half, with direct effects on uptime, maintenance costs, and compliance with standards like ISO 14644-1.

As liquid cooling and direct-to-chip water systems become more common, liquid particle monitoring becomes equally important to prevent fouling, clogging, and erosion inside cold plates, heat exchangers, and narrow coolant channels. Even small numbers of particles in coolant lines can accumulate, restrict flow, create thermal hot spots, reduce heat transfer efficiency, and ultimately threaten GPU and server reliability. Fine filtration and liquid particle counting therefore serve as an early-warning layer that protects both cooling performance and high-density computing investments.

Liquid cooling hardware in data centers uses very tight passages inside cold plates, microchannels, and heat exchangers, so even modest particulate loading can start sticking, bridging, and building up on surfaces. Over time that buildup narrows channels, raises pressure drop, and starves sections of a plate, which shows up as thermal hot spots and unstable GPU or CPU temperatures.

Because particles often come from corrosion, make‑up water, or filter upset events, the system can go from “clean” to “trending bad” in hours, not months. Continuous liquid particle counting turns that into an early‑warning metric so operations teams can isolate a loop, swap filters, or service a corrosion source before hardware is exposed for long periods.

Lighthouse’s RLPC LE is built for continuous, 24/7 liquid particle monitoring, with measurement ranges covering 1.0–50 μm or 1.0–200 μm at a 30 mL/min flow, which lines up well with the particle sizes that cause plugging, fouling, and erosion in liquid cooling hardware. The sensor can report up to eight channels of simultaneous particle count data, so you can watch different size bands and tie specific alarm thresholds to “risk of plugging” versus “early erosion or corrosion signal.”

In practice, that means you can set baseline particle levels for “good” coolant, define warning and critical thresholds, and then let RLPC LE act as the early tripwire that protects both cooling performance and high‑density GPU investments. Over time, those trends help you optimize filter selection, replacement intervals, and chemistry so liquid cooling becomes predictable rather than a hidden risk for your compute clusters.
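The baseline, warning, and critical workflow described above can be sketched as a per-channel threshold check. This is an illustration only: the channel sizes, the limits in counts per mL, and the names `ChannelLimits`, `LIMITS`, and `classify` are hypothetical, and the code does not represent the RLPC LE's own interface or any recommended limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelLimits:
    """Warning and critical limits for one size channel, in counts per mL."""
    warning: float
    critical: float


# Hypothetical limits keyed by the channel's lower size bound in micrometres.
# Real limits would be derived from a site's own "good coolant" baseline.
LIMITS = {
    1.0: ChannelLimits(warning=500.0, critical=2000.0),
    10.0: ChannelLimits(warning=50.0, critical=200.0),
    50.0: ChannelLimits(warning=5.0, critical=20.0),
}


def classify(sample: dict) -> str:
    """Return the worst status across all channels present in the sample."""
    status = "ok"
    for size_um, count in sample.items():
        limits = LIMITS.get(size_um)
        if limits is None:
            continue  # channels without configured limits are ignored
        if count >= limits.critical:
            return "critical"
        if count >= limits.warning:
            status = "warning"
    return status


clean = classify({1.0: 120.0, 10.0: 8.0, 50.0: 0.0})
drifting = classify({1.0: 650.0, 10.0: 8.0, 50.0: 0.0})
plugging_risk = classify({1.0: 650.0, 10.0: 8.0, 50.0: 25.0})
print(clean, drifting, plugging_risk)
```

Keeping separate limits per size band mirrors the idea of treating large particles as a plugging risk and a rise in fine particles as an early erosion or corrosion signal.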

Equipment Protection: Even microscopic particles can accumulate on circuit boards and other components, causing overheating, interference, short circuits, and eventual equipment failure.

Downtime Prevention: Contamination has been linked to significant IT downtime, which can be more costly than other disasters. Particle counters provide an early warning of potential contamination issues before they lead to serious problems.

Standard Compliance: Leading organizations like the Uptime Institute and ASHRAE emphasize the need for clean environments. Data centers are typically recommended to meet at least ISO 14644-1 Class 8 cleanliness, a level that is verified using particle counters.
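For context on what ISO Class 8 means numerically, ISO 14644-1 relates a class number N to a maximum airborne concentration through the relation Cn = 10^N × (0.1 / D)^2.08, where D is the particle size in micrometres and Cn is in particles per cubic metre. The sketch below applies that relation; the function names and the sample readings are hypothetical, and the published tables round the results to three significant figures, so the standard itself remains the reference for formal classification.

```python
def iso_class_limit(iso_class: float, particle_size_um: float) -> float:
    """Maximum particles per cubic metre at or above the given size.

    Applies the ISO 14644-1 relation Cn = 10**N * (0.1 / D)**2.08.
    """
    if not 1 <= iso_class <= 9:
        raise ValueError("ISO class must be between 1 and 9")
    if particle_size_um <= 0:
        raise ValueError("particle size must be positive")
    return 10 ** iso_class * (0.1 / particle_size_um) ** 2.08


def meets_class(measured_per_m3: dict, iso_class: float = 8) -> bool:
    """True if every measured size channel is at or below its class limit."""
    return all(
        count <= iso_class_limit(iso_class, size_um)
        for size_um, count in measured_per_m3.items()
    )


# Hypothetical cumulative counts per cubic metre from an airborne counter.
room_ok = meets_class({0.5: 1_200_000, 1.0: 300_000, 5.0: 10_000})
room_dirty = meets_class({0.5: 4_000_000, 1.0: 300_000, 5.0: 10_000})
print(room_ok, room_dirty)
```

Note that a lower class number is a cleaner room, so a space that meets Class 7 also meets Class 8.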

Regular testing: Particle counters are used to perform routine tests to measure the concentration of airborne particles.

ISO compliance: Data centers should aim for ISO Class 8 or cleaner (a lower class number means a cleaner space), and particle counters are used to verify that this standard is met.

Risk assessment: They help detect abnormal trends in particle counts that may indicate a problem with filtration or cleaning protocols, prompting corrective action.

Data analysis: The data collected can be used to compare against industry standards and establish benchmarks for ongoing monitoring.

Regular Monitoring: While some facilities perform scheduled testing (e.g., annually), continuous monitoring offers an early warning system for sudden issues like a gap in a door seal or a malfunctioning air handler.

Strategic Placement: Portable or fixed-point remote counters can be used in different areas of the data center to measure particle levels effectively.

Integrated Approach: Particle counting should be part of a comprehensive contamination control strategy that also includes using high-quality air filters (e.g., MERV 11 or 13), employing proper cleaning practices (e.g., damp mopping), and controlling humidity levels.

ASHRAE “Class A1” is the data center IT equipment class that describes the allowable and recommended operating environment for enterprise servers and storage:

Typical equipment: Enterprise servers and storage in mission‑critical, tightly controlled data centers.

Recommended temperature (A1–A4 hardware): 18–27 °C (64.4–80.6 °F).

Allowable temperature (A1 only): 15–32 °C (59–89.6 °F).

Humidity envelope (A1): From a lower bound of about −12 °C dew point and 8% RH up to an upper bound of about 17 °C dew point and 80% RH; older editions of the guidelines listed 20–80% RH.

This class assumes a data center with close control of temperature, humidity, and dew point to protect hardware and maintain reliability.
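Because the humidity envelope is expressed partly in dew point, a sensor that reports only temperature and relative humidity needs a conversion before it can be checked against the limits. The sketch below uses the Magnus approximation with one common coefficient set; the function names are hypothetical, and the envelope check assumes the A1 limits as quoted in this article (dew point between −12 °C and 17 °C, relative humidity between 8% and 80%).

```python
import math

# Magnus coefficients for saturation over liquid water (one common set).
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # degrees Celsius


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Approximate dew point in degrees Celsius from dry-bulb temperature and RH."""
    if not 0 < rh_percent <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + MAGNUS_A * temp_c / (MAGNUS_B + temp_c)
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)


def within_a1_moisture_envelope(temp_c: float, rh_percent: float) -> bool:
    """Check the A1 moisture limits as quoted in this article (an assumption)."""
    dp = dew_point_c(temp_c, rh_percent)
    above_lower = dp >= -12.0 and rh_percent >= 8.0
    below_upper = dp <= 17.0 and rh_percent <= 80.0
    return above_lower and below_upper


print(f"Dew point at 24 °C / 50% RH: {dew_point_c(24.0, 50.0):.1f} °C")
print(within_a1_moisture_envelope(24.0, 50.0))  # moderate conditions
print(within_a1_moisture_envelope(27.0, 70.0))  # warm and humid air
```

The Magnus formula is an approximation, so readings close to a limit are better confirmed against the psychrometric chart in the guidelines.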

ASHRAE “Class A2” is a data center IT equipment class that allows a wider operating temperature range than A1 while using the same recommended inlet range of about 18–27 °C (64.4–80.6 °F).

Typical equipment: General IT/enterprise servers and storage that can tolerate warmer conditions than strict Class A1 environments.

Use case: Data centers or IT rooms with some environmental control, where running slightly warmer reduces cooling energy while staying within vendor specs.

Allowable temperature: 10–35 °C (50–95 °F) at the IT equipment inlet for Class A2 gear.

Humidity: Commonly specified at 20–80% RH, with a maximum dew point around 21 °C for A2.

ASHRAE “Class A3” is a data center IT equipment class that allows significantly warmer inlet air than A1 and A2, while still using roughly the same optimal “recommended” band as the other A‑classes.

Typical equipment: More robust IT gear (often workstations or PCs used as servers, or specially rated servers) that can tolerate higher temperatures than standard enterprise hardware.

Use case: Facilities that want to run warmer, use more economization/outside air, or accept higher short‑term excursions to reduce cooling energy.

Allowable temperature (A3): 5–40 °C (41–104 °F) at the IT equipment inlet.

Humidity: Maximum dew point about 24 °C with relative humidity roughly 8–85% for A3 equipment.

ASHRAE “Class A4” is the warmest of the standard data‑center IT equipment classes, allowing the broadest inlet temperature and humidity range while still sharing the same preferred “recommended” band as A1–A3.

Typical equipment: Very robust IT gear (often ruggedized or special‑purpose systems) designed to tolerate high operating temperatures compared with conventional enterprise servers.

Intent: Maximize ability to use outside‑air economization and minimize mechanical cooling by permitting very warm operation when needed.

Allowable temperature: 5–45 °C (41–113 °F) at the IT equipment inlet for Class A4 devices.

Humidity: Maximum dew point about 24 °C, with relative humidity typically allowed from roughly 8–90% for A4 equipment.
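The A1–A4 temperature ranges listed above lend themselves to a simple lookup that a monitoring system could use to label each inlet reading. This is a minimal sketch using only the ranges quoted in this article; the names `ALLOWABLE_C`, `RECOMMENDED_C`, and `inlet_status` are hypothetical, and vendor specifications take precedence for any specific hardware.

```python
# Inlet temperature ranges in degrees Celsius, copied from the text above.
RECOMMENDED_C = (18.0, 27.0)  # shared by classes A1 through A4
ALLOWABLE_C = {
    "A1": (15.0, 32.0),
    "A2": (10.0, 35.0),
    "A3": (5.0, 40.0),
    "A4": (5.0, 45.0),
}


def inlet_status(equipment_class: str, inlet_c: float) -> str:
    """Label an inlet reading as recommended, allowable, or out of range."""
    low, high = ALLOWABLE_C[equipment_class]  # KeyError for unknown classes
    if RECOMMENDED_C[0] <= inlet_c <= RECOMMENDED_C[1]:
        return "recommended"
    if low <= inlet_c <= high:
        return "allowable"
    return "out of range"


for cls, reading in [("A1", 24.0), ("A1", 30.0), ("A1", 34.0), ("A2", 34.0)]:
    print(cls, reading, inlet_status(cls, reading))
```

The same 34 °C reading is out of range for A1 hardware but still allowable for A2, which is the practical difference between the classes.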

ASHRAE “Class H1” is a newer data‑center IT equipment class intended for high‑density, air‑cooled systems that integrate very high‑power components like CPUs, GPUs/accelerators, and high‑speed networking.

High‑density focus: H1 is meant for tightly packed servers where there is limited space for larger heat sinks and fans, so components need a cooler and more tightly controlled air environment than standard A1–A4 gear.

Deployment pattern: H1 equipment is usually placed in dedicated “cold” zones or pods within a data center, with independent controls and cooling to maintain the narrower envelope.

Recommended temperature (H1): 18–22 °C (64.4–71.6 °F) at the server inlet.

Allowable temperature (H1): 15–25 °C (59–77 °F), which is notably tighter and cooler than the A1–A4 allowable ranges.

Humidity: Uses essentially the same moisture envelope logic as A‑class hardware; minimum is the higher of −12 °C dew point or 8% RH, and maximum dew point is about 17–18 °C depending on the specific chart.